Dear Colleagues and Friends,

Four years ago, in June 2019, Management Science introduced a new Data and Code Disclosure Policy to ensure the reproducibility of research published in the journal. A Code and Data Editor was appointed in April 2020. Under the policy, all papers that use code or data and were submitted after its introduction must provide replication materials, which must be approved by the Code and Data Editor.

The journal's Code and Data Editor, Ben Greiner, and his team of Associate Editors (Miloš Fišar, Christoph Huber, Ali Ozkes) have just completed the review of their 600th replication package.

This is therefore a good opportunity to look back at the last three years and share some insights on what we have learnt since the policy was implemented. It is also an opportunity to update the community on the ongoing Management Science Reproducibility Project.

Update on Data and Code Disclosure Policy

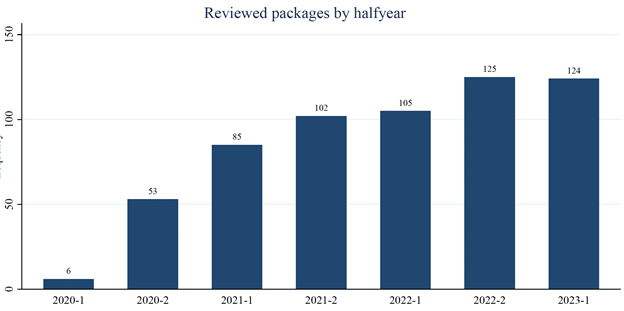

The number of reviewed replication packages has been steadily increasing, with about 250 packages just in the past 10 months. For context, in 2022 Management Science had 4200 submissions, and accepted 430 papers.

Reviewed papers come from all Management Science departments. About 60% of the reviewed articles are based on empirical data. About 20% report laboratory, online, or field experiments or surveys, and another 20% are theory/simulation papers (typically with code but no data).

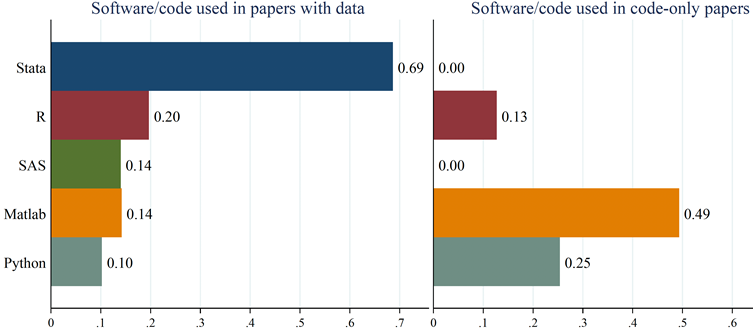

Among papers using datasets, 69% use Stata, and 20% use R. Among code-only papers, R is more popular than Stata, as are Matlab and Python, with a slight shift towards Python over time. However, across replication packages the reviewers encountered a large variety of statistical and computational tools, including Fortran, GAMS, GAUSS, Java, Mathematica, SPSS, SQL, and LINGO.

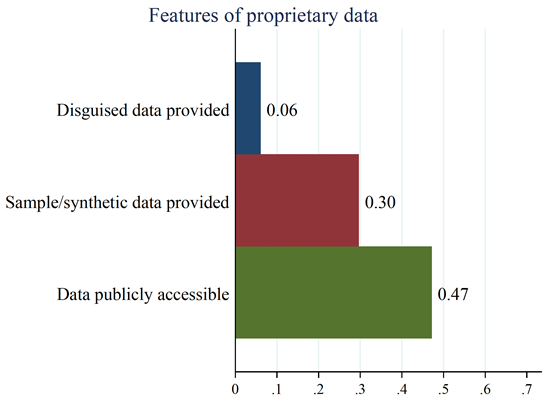

Among all papers that include data, 50% of the eventual replication packages include all data necessary to replicate, while the others rely on proprietary data. Among papers with proprietary data, 47% of the datasets are publicly accessible (e.g., via subscription services like WRDS or Compustat), and 30% of papers provide at least sample or synthetic data. Recently, for papers with proprietary data, the Code and Data Editor has more often asked authors to include sample or synthetic data. This yielded more such data (35% in the last year vs. 24% before) and was one of the reasons why we could run code tests for more packages (68% in the last year vs. 60% previously). Over all 3 years, the Code and Data review team could check whether code runs through without errors for 64% of all replication packages. However, they do not check the soundness of methods or the actual results; this is left to the academic community.

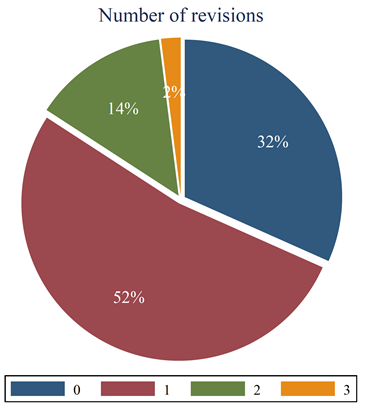

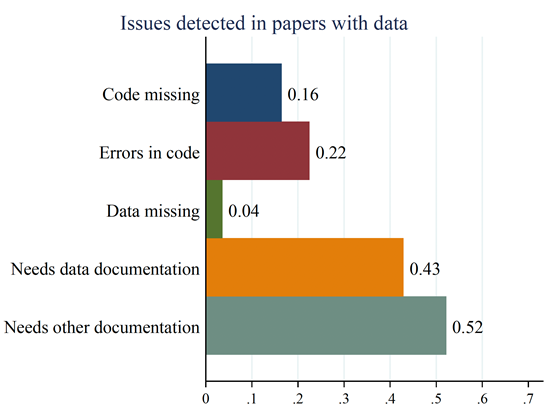

About a third of submitted replication materials could be accepted as is, without further revisions. Most replication packages needed one revision round, and about 16% needed 2 rounds or more until the replication package was approved. Most often, the Code and Data Editor had to ask for better documentation (variable dictionaries, dataset construction instructions, code documentation, etc.).

Sometimes parts of the code were missing. Code errors are not uncommon: code could be tested for 64% of packages, and code errors were found in 22% of packages, which implies that about 34% of submitted and tested code had issues. However, code reliability improved significantly over time. While in the first two years of code and data review, issues in code were detected in 38% of code-tested replication packages, this decreased to 28% in the past year.
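As a rough check of the 34% figure, assuming the 22% refers to all reviewed packages and the 64% to the share of packages whose code could be tested, the error rate among code-tested packages is:

$$
\frac{0.22}{0.64} \approx 0.34
$$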

The code/data review at Management Science takes about 27 days on average (median 16) from taking a paper into review to approving it. A paper spends about 12 days (median 7) with the Code and Data Editor and his team, and about 15 days (median 3) with authors for revisions of their replication materials. Because the workload has increased significantly over time, however, processing time rose from about 23 days in the first 2 years to 30 days in the last year (2 more days with the Code and Data Editor, 5 more days with authors). In addition, a backlog has built up that has not yet been processed.

In 5% of reviewed papers, during the revision of their replication materials, authors detected issues requiring changes in the paper. However, all these detected issues were minor (rounding errors, typos, etc.), and changes were approved by a Department Editor. This number improved to 4% in the last year, from 6% previously.

In sum, the Code and Data review at Management Science is active, but also faces challenges due to the increased number of papers under review. We also see improvements over time, and authors seem to be getting used to providing replication packages with data, code, and documentation.

The Management Science Reproducibility Project

While the Code and Data Editor and his team check replication packages for completeness, they do not have the capacity to reproduce the results reported in the paper. The objective of the Reproducibility Project is to assess the reproducibility of articles published in Management Science before and after the 2019 policy change.

With Miloš Fišar, Ben Greiner, Christoph Huber, Elena Katok, and Ali Ozkes as project coordinators, the journal recruited 935 researchers to assist in this large-scale project. The team matched articles to reviewers based on department, software skills, and data access. Depending on the paper, some articles were assigned two reviewers and others only one.

So far, over 700 reviewers have submitted their reports. They attempted to reproduce 490 papers published in Management Science, based on the replication materials provided.

We are very excited about and thankful for the broad community participation in the Management Science Reproducibility Project. The team is currently finishing the collection phase of the reproducibility reviews. Results will likely be finalized and reported in the summer of this year.

David Simchi-Levi

Editor-in-Chief

Management Science

E-mail: mseic@mit.edu

------------------------------

David Simchi-Levi

Professor of Engineering Systems

Massachusetts Institute of Technology

Cambridge MA

------------------------------