Praveen Manimangalam

Doctoral Researcher - Florida International University

Introduction: Why the Layer Model Breaks

For decades, enterprise AI has been depicted as the "Penthouse" atop a corporate skyscraper: a data-rich intelligence layer providing insights to the floors below. This "Layer Cake" model (Data → Process → Intelligence) works for analytics and chatbots. With Agentic AI, however, it fundamentally breaks down.

An agent doesn't just know; it acts. If we merely bolt on "agency" as another layer, we create brittle systems prone to State Divergence (records that no longer match what actually happened) and Orphaned Actions. To harness autonomous capabilities safely, we must re-architect our thinking from a linear stack to a Closed-Loop Control System.

1. The Paradigm Shift: From GPS to Self-Driving Enterprise

To grasp this architectural imperative, consider the difference between a GPS app and a self-driving car:

| Feature | The GPS App (Traditional AI) | The Self-Driving Car (Agentic AI) |
|---|---|---|
| Logic | Open-Loop: Prompt → Response. | Closed-Loop: Goal → Plan → Act → Observe. |
| Input | You input a destination. | It is given a goal/destination. |
| Output | It provides instructions. | It executes maneuvers. |
| Role | You are the driver. | The car is the driver; you are the supervisor. |

The Core Insight: A self-driving brain isn't just an "add-on" to a car; it's deeply integrated with the steering, brakes, and sensors. Similarly, Agentic AI must be integrated into the core operational fabric, not merely placed on top.
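To make the open-loop versus closed-loop distinction concrete, here is a minimal Python sketch. It assumes a hypothetical supplier, `deliver`, that only ever ships half of what is requested (rounded up). The open-loop function issues one order and trusts the response; the closed-loop function repeats Plan → Act → Observe against the observed stock level until the goal is met, with a cap on the number of steps. All names and numbers are illustrative.

```python
def deliver(requested: int) -> int:
    """Hypothetical supplier: ships only half of each request, rounded up."""
    return (requested + 1) // 2


def open_loop(stock: int, goal: int) -> int:
    """GPS-style (Prompt -> Response): issue one order and assume it worked."""
    return stock + deliver(goal - stock)


def closed_loop(stock: int, goal: int, max_steps: int = 10) -> tuple[int, int]:
    """Goal -> Plan -> Act -> Observe, repeated until the goal is reached.

    Returns (final_stock, orders_placed).
    """
    orders = 0
    for _ in range(max_steps):
        gap = goal - stock          # Observe the current state against the goal
        if gap <= 0:
            break                   # Goal reached
        stock += deliver(gap)       # Plan (order the remaining gap) and Act
        orders += 1
    return stock, orders


if __name__ == "__main__":
    print(open_loop(0, 100))    # 50
    print(closed_loop(0, 100))  # (100, 7)
```

Starting from zero stock with a goal of 100 units, the open-loop call stops at 50 units, while the closed-loop call reaches 100 after seven orders because each iteration reacts to what was actually delivered.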

2. The Fallacy of the "Intelligence Layer"

The danger of treating agency as a standalone intelligence layer is that it bypasses the Process Layer, the very heart of enterprise control.

The "Orphaned Action" Explained

Imagine an AI Agent tasked with "expediting a critical customer order."

1. The Mistake: The Agent, operating as an isolated layer, directly updates a database record marking the order as "shipped" and triggers a payment.

2. The Chaos: Because the Agent bypassed the formal "Shipping Workflow," the inventory count remains unchanged, the legal Bill of Lading isn't generated, and the physical shipment never leaves the warehouse. Money is transacted, but no value is delivered.

The Axiom: Agency must not operate above your processes; it must act through them.

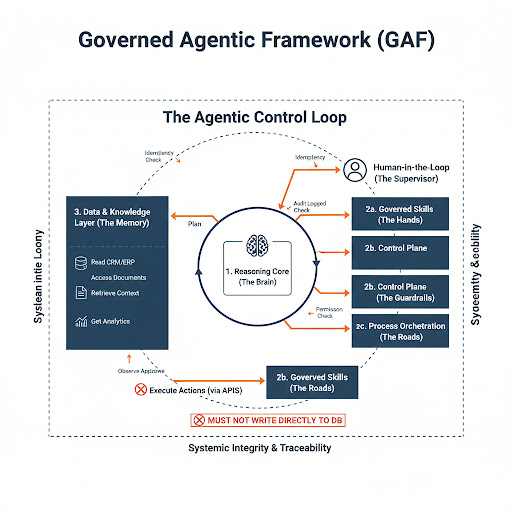
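The orphaned-action failure can be reproduced in a small Python sketch. The `Enterprise` container, the `shipping_workflow` function, and the SKU and order IDs are hypothetical stand-ins for real systems of record. The workflow validates first and then performs every side effect of shipping (inventory decrement, Bill of Lading, status change, payment), whereas the direct write changes only the status and the payment, leaving a paid order with no Bill of Lading.

```python
from dataclasses import dataclass, field


@dataclass
class Enterprise:
    """Hypothetical in-memory stand-ins for the systems of record."""
    inventory: dict = field(default_factory=lambda: {"CHIP-7": 10})
    orders: dict = field(
        default_factory=lambda: {"A1": {"sku": "CHIP-7", "qty": 4, "status": "open"}}
    )
    bills_of_lading: list = field(default_factory=list)
    payments: list = field(default_factory=list)


def orphaned_action(ent: Enterprise, order_id: str) -> None:
    """Anti-pattern: the agent writes the record directly and triggers payment."""
    ent.orders[order_id]["status"] = "shipped"
    ent.payments.append(order_id)


def shipping_workflow(ent: Enterprise, order_id: str) -> None:
    """Formal Shipping Workflow: validate first, then apply every side effect."""
    order = ent.orders[order_id]
    sku, qty = order["sku"], order["qty"]
    if ent.inventory.get(sku, 0) < qty:
        raise ValueError(f"insufficient stock for {sku}")  # nothing changed yet
    ent.inventory[sku] -= qty
    ent.bills_of_lading.append({"order": order_id, "sku": sku, "qty": qty})
    order["status"] = "shipped"
    ent.payments.append(order_id)


def is_consistent(ent: Enterprise) -> bool:
    """Invariant: every paid order must have a Bill of Lading."""
    shipped = {bol["order"] for bol in ent.bills_of_lading}
    return all(order_id in shipped for order_id in ent.payments)


if __name__ == "__main__":
    bad, good = Enterprise(), Enterprise()
    orphaned_action(bad, "A1")
    shipping_workflow(good, "A1")
    print(is_consistent(bad), bad.inventory)    # False {'CHIP-7': 10}
    print(is_consistent(good), good.inventory)  # True {'CHIP-7': 6}
```

In the sketch, an agent that acts "through" the process simply calls `shipping_workflow` and never touches `orders` or `payments` itself.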

3. The Governed Agentic Framework (GAF): A Control-Theoretic Approach

Instead of a stack, we propose the Governed Agentic Framework (GAF). This model ensures that intelligence is always bounded by operational constraints.

Who Does What? A Layer-by-Layer Breakdown

The Intelligence Layer (The Brain): Translates high-level goals (e.g., "Optimize Q4 Logistics") into a step-by-step plan. It reasons and handles errors but never writes data directly.

The Process Layer (The Hands): This is where "actions" happen. The agent triggers pre-validated workflows and APIs. By using existing "roads," the agent stays within the law of the business.

The Data Layer (The Memory): A read-only library. Agents use this for context and history. Agents are strictly forbidden from writing directly to this layer.

The Governance Plane (The Guardrails): Monitors permissions, logs the agent's "Chain of Thought," and provides a manual Kill Switch for human supervisors.
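A minimal Python sketch of how the four GAF roles might be separated in code follows. It assumes a single hypothetical `reorder` workflow and a plain dictionary of records. The standard-library `types.MappingProxyType` gives the Intelligence Layer (`plan`) a live, read-only view of the Data Layer, so an attempted write raises `TypeError`. State changes go only through `ProcessLayer.invoke`, which checks the Governance Plane's kill switch and writes an audit entry before running the workflow. Class and method names are illustrative, not a prescribed API.

```python
from types import MappingProxyType


class GovernancePlane:
    """Guardrails: audit log of every invocation plus a human kill switch."""

    def __init__(self) -> None:
        self.audit_log: list = []
        self.halted = False


class ProcessLayer:
    """Hands: the only code path allowed to change state."""

    def __init__(self, records: dict, governance: GovernancePlane) -> None:
        self._records = records
        self._gov = governance
        self._workflows = {"reorder": self._reorder}  # pre-validated workflows

    def _reorder(self, sku: str, qty: int) -> None:
        if qty <= 0:
            raise ValueError("qty must be positive")
        key = f"stock:{sku}"
        self._records[key] = self._records.get(key, 0) + qty

    def invoke(self, name: str, **kwargs) -> None:
        if self._gov.halted:
            raise RuntimeError("halted by kill switch")
        if name not in self._workflows:
            raise PermissionError(f"no such workflow: {name}")
        self._gov.audit_log.append((name, kwargs))  # logged before execution
        self._workflows[name](**kwargs)


def plan(sku: str, target: int, memory) -> list:
    """Brain: turns a goal into steps; reads memory but cannot write it."""
    current = memory.get(f"stock:{sku}", 0)
    if current >= target:
        return []
    return [("reorder", {"sku": sku, "qty": target - current})]


if __name__ == "__main__":
    records = {"stock:CHIP-7": 10}
    memory = MappingProxyType(records)  # Data Layer, as the agent sees it
    gov = GovernancePlane()
    hands = ProcessLayer(records, gov)
    for name, args in plan("CHIP-7", 25, memory):
        hands.invoke(name, **args)
    print(memory["stock:CHIP-7"])  # 25
    try:
        memory["stock:CHIP-7"] = 0  # direct write from the agent side
    except TypeError:
        print("direct write rejected")
```

Python privacy is a convention only (the agent could still reach `hands._records`), so in a production system this boundary would be enforced by service-level permissions rather than by class layout alone.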

4. Case Study: Resilient Supply Chain Management

Scenario: A Tier-1 supplier flags a 20% shortage in semiconductor delivery.

- Passive AI (Traditional): Summarizes the email and suggests the Procurement Manager look for other vendors.
- Agentic AI (Architected):
  - Perceive: Scans Bill of Materials data to identify which finished goods are at risk.
  - Evaluate: Runs a "What-If" simulation in the ERP to check safety stock.
  - Act: Invokes the Request_Quote_API for three pre-vetted rail providers.
  - Observe: Realizes Provider A is at capacity; pivots to Provider B.
  - Finalize: Drafts the Purchase Orders and sends a high-priority approval request to the manager.
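The five agentic steps can be traced in a small Python sketch. Every figure is invented for illustration: the part `SEMI-42`, the finished goods, stock levels, planned consumption, and the three providers with their unit prices and spare capacity. `request_quote` is a hypothetical stand-in for the `Request_Quote_API` named in the scenario, and the sketch only drafts the Purchase Order; approval stays with the manager.

```python
from dataclasses import dataclass

# Hypothetical ERP data (read-only inputs to the agent).
BOM = {"Controller-X": {"SEMI-42": 2}, "Sensor-Y": {"SEMI-42": 1}, "Cable-Z": {"CU-1": 3}}
ON_HAND = {"SEMI-42": 300}
EXPECTED_DELIVERY = {"SEMI-42": 1000}
PLANNED_USE = {"SEMI-42": 900}
SAFETY_STOCK = {"SEMI-42": 300}

# Hypothetical pre-vetted providers: (unit price, spare capacity).
PROVIDERS = {"A": (9.0, 0), "B": (11.0, 500), "C": (12.5, 250)}


@dataclass
class Quote:
    provider: str
    total_price: float
    has_capacity: bool


def request_quote(provider: str, qty: int) -> Quote:
    """Stand-in for Request_Quote_API."""
    unit_price, capacity = PROVIDERS[provider]
    return Quote(provider, unit_price * qty, capacity >= qty)


def handle_shortage(part: str, shortfall_pct: int) -> dict:
    # Perceive: finished goods whose Bill of Materials uses the short part.
    at_risk = sorted(fg for fg, parts in BOM.items() if part in parts)
    # Evaluate: what-if projection of stock after the reduced delivery.
    delivered = EXPECTED_DELIVERY[part] * (100 - shortfall_pct) // 100
    projected = ON_HAND[part] + delivered - PLANNED_USE[part]
    gap = SAFETY_STOCK[part] - projected
    if gap <= 0:
        return {"at_risk": at_risk, "action": "none"}
    # Act: request quotes from every pre-vetted provider.
    quotes = [request_quote(p, gap) for p in PROVIDERS]
    # Observe: drop providers at capacity (A here) and pivot to the best remaining one.
    viable = [q for q in quotes if q.has_capacity]
    if not viable:
        return {"at_risk": at_risk, "action": "escalate"}
    best = min(viable, key=lambda q: q.total_price)
    # Finalize: draft the PO only; a human approves before anything is committed.
    po = {"part": part, "qty": gap, "provider": best.provider, "status": "pending_approval"}
    return {"at_risk": at_risk, "action": "approval_requested", "po": po}


if __name__ == "__main__":
    print(handle_shortage("SEMI-42", 20))
```

With a 20% shortfall, the projected stock falls to 200 units against a safety stock of 300, so the sketch drafts a 100-unit order with Provider B (A has no capacity, and B is cheaper than C). If no provider can cover the gap, it escalates instead of acting.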

5. The Redline Document: 5 Rules for Safe Agency

To build a secure, industrial-grade agentic system, enforce these architectural constraints:

NO DIRECT DATABASE WRITES: All data modifications must occur via validated APIs/Services.

NO “GOD MODE”: Agents must use Identity Propagation, inheriting the specific permissions of the human user they represent.

NO UNBOUNDED LOOPS: Every plan must have a Maximum Step Count. If an action fails repeatedly, the agent must “Pause and Escalate.”

NO “BLACK BOX” LOGIC: An agent cannot execute an action unless it has logged its “Reasoning Trace” (the why behind the move) first.

NO HIGH-VALUE AUTONOMY: Actions crossing a “Criticality Threshold” (e.g., spending >$5,000) require a Human-in-the-Loop signature.
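One way to enforce the five rules is a single guard that every agent action must pass through. The Python sketch below is an illustration under stated assumptions, not a reference implementation: `Action`, `Guard`, `Escalate`, the permission strings, and the `apis` registry are hypothetical names. Rule 1 is modeled by dispatching only to registered APIs, Rule 2 by checking permissions inherited from the human user, Rule 3 by a step cap and a consecutive-failure limit, Rule 4 by refusing actions without a reasoning trace and logging it before execution, and Rule 5 by the $5,000 threshold.

```python
from dataclasses import dataclass, field
from typing import Callable

CRITICALITY_THRESHOLD = 5_000  # dollars (Rule 5)


class Escalate(Exception):
    """Pause and hand control to a human supervisor."""


@dataclass
class Action:
    api: str             # validated API/service to call (Rule 1)
    permission: str      # permission that API requires (Rule 2)
    amount: float = 0.0  # dollar value at stake (Rule 5)
    reasoning: str = ""  # reasoning trace, the "why" (Rule 4)


@dataclass
class Guard:
    user_permissions: set[str]                   # inherited from the human user (Rule 2)
    apis: dict[str, Callable[[Action], object]]  # registry of validated APIs (Rule 1)
    max_steps: int = 10                          # Rule 3
    max_failures: int = 3                        # consecutive failures before escalating
    steps: int = 0
    failures: int = 0
    trace_log: list = field(default_factory=list)

    def execute(self, action: Action, human_signature: bool = False):
        if action.api not in self.apis:
            raise PermissionError(f"{action.api} is not a validated API")  # Rule 1
        if action.permission not in self.user_permissions:
            raise PermissionError(f"user lacks {action.permission}")       # Rule 2
        if self.steps >= self.max_steps:
            raise Escalate("maximum step count reached")                   # Rule 3
        if not action.reasoning.strip():
            raise ValueError("reasoning trace required before acting")     # Rule 4
        if action.amount > CRITICALITY_THRESHOLD and not human_signature:
            raise Escalate("human-in-the-loop signature required")         # Rule 5
        self.trace_log.append((action.api, action.reasoning))  # log first (Rule 4)
        self.steps += 1
        try:
            result = self.apis[action.api](action)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                raise Escalate("repeated failures: pause and escalate")   # Rule 3
            raise
        self.failures = 0
        return result
```

For example, `Guard({"po.create"}, {"create_po": ...}).execute(Action("create_po", "po.create", 6000, "bulk buy"))` raises `Escalate` until the same call is repeated with `human_signature=True`. Centralizing the checks in one choke point keeps the rules auditable, at the cost of every tool call paying for the checks.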

Conclusion: Agency is a System State, Not a Feature

Agentic AI is the bridge between Data Science and Operations Research. It is where the model finally meets the mission. For the INFORMS community, the challenge is clear: we must ensure that as we add "autonomy," we do not sacrifice "integrity."

Agentic AI is not a new "floor" on your enterprise building. It is the automation of the hallways and elevators. If they aren't built into the structural frame of your architecture, the building cannot function.